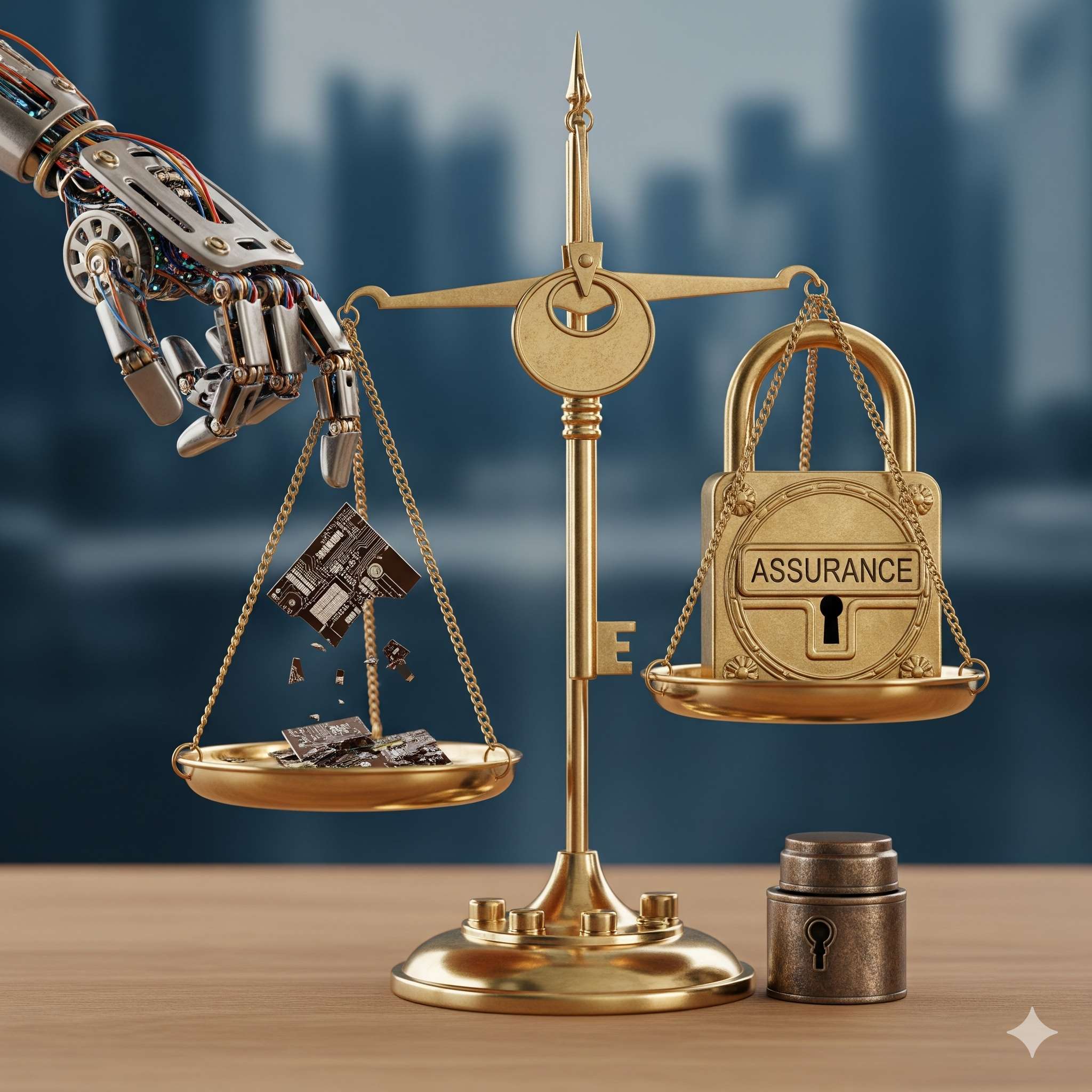

AI Safety Theater Today, Red Tape Tomorrow: How Overconfidence Invites Regulation

Industries that loudly promise "self-regulation" and "responsible AI" often get the opposite of what they want: heavy-handed government oversight. History shows that regulatory backlash follows predictable patterns.

The regulatory backlash cycle

- Phase 1: Industry promises self-regulation and "responsible innovation."

- Phase 2: High-profile failures expose gaps between promises and reality.

- Phase 3: Regulators impose strict rules, often overcorrecting for past failures.

Historical patterns that predict AI's future

- Financial crisis → Dodd-Frank — banks' self-regulation promises led to comprehensive federal oversight.

- Boeing 737 MAX → FAA tightening — industry self-certification resulted in stricter government controls.

- Social media privacy → GDPR/CCPA — "we'll police ourselves" became mandatory compliance frameworks.

Current AI safety theater warning signs

Scenario A: AI companies publish "ethics principles" while deploying systems with known bias issues. When discrimination lawsuits multiply, regulators may mandate algorithmic auditing requirements.

Scenario B: Healthcare AI vendors promise "human oversight" but automate most decisions. After patient safety incidents, regulators could mandate human review of every AI medical recommendation.

Scenario C: Hiring AI tools claim "bias-free" recruiting while perpetuating discrimination. The Equal Employment Opportunity Commission (EEOC) may respond with prescriptive AI testing requirements and mandatory disclosure rules.

Why overconfidence accelerates regulation

- Public trust erosion — when "safe AI" claims prove false, voters demand government action.

- Regulatory credibility — agencies must show they're protecting the public after industry failures.

- Political pressure — lawmakers face pressure to "do something" when self-regulation fails visibly.

What stricter AI regulation might look like

Based on historical patterns, possible requirements include:

- Mandatory third-party audits for AI systems in sensitive applications

- Algorithmic transparency requirements and explainability standards

- Liability frameworks that make companies responsible for AI decisions

- Licensing requirements for AI developers and deployers

Insurance: preparing for regulatory changes

- Regulatory coverage — some policies cover costs of complying with new regulations.

- Retroactive liability — new rules may create liability for past AI decisions.

- Compliance costs — stricter oversight means higher operational expenses → learn more about AI insurance considerations.

Five questions to prepare for regulatory backlash

- Are we making AI safety claims we can't substantiate under scrutiny?

- What would mandatory third-party audits reveal about our AI systems?

- How would new transparency requirements affect our competitive advantage?

- Are we prepared for potential retroactive liability for current AI decisions?

- Does our insurance cover regulatory compliance costs and new liability frameworks? See our 5 questions to ask your insurer.


Stay ahead of regulatory backlash

Start with our free 10-minute AI preflight check to assess your regulatory risk exposure, then get the complete AI Risk Playbook for frameworks that prevent costly compliance surprises.